One of the purposes of the Tony Little Centre for Innovation and Research in Learning (CIRL) at Eton is to make sure that the school is keeping abreast of developments and innovations in education. Innovation typically seeds out of sight to meet a niche need; if it later finds a mainstream application, it is likely to be well-formed and possibly either a benefit or a potential threat. To future-proof our current success we need to be paying attention to the most significant innovations and to make sure that we see change coming.

Artificial Intelligence (AI) is one such innovation. AI is already impacting on the kinds of careers many Etonians go into, including finance, medicine, law and the military. It is also beginning to impact on teaching: on how teachers prepare materials, organise learning spaces, present material to engage students, assess and give feedback, prepare for terminal assessments and write reports. At the moment AI is at a rudimentary level in education, but we can be sure it will not stay that way.

Precisely how teachers and AI will interact is yet unclear. One group of experts in AI in education (AIEd) argues:

We do not see a future in which AIEd replaces teachers. What we do see is a future in which the role of the teacher continues to evolve and is eventually transformed; one where their time is used more effectively and efficiently, and where their expertise is better deployed, leveraged, and augmented.[1]

Intelligence Unleashed. An argument for AI in Education

Eton is currently trialling several forms of Educational Technology (EdTech). We generally start small and scale up when we find something promising.

One such trial is of a new AI platform called Progressay, which claims that it can mark English Literature essays more accurately than examiners. As an English teacher I was initially sceptical. So much of the study of literature is about an understanding of human experience, an appreciation of beauty, an awareness of the nuance of language, and an imaginative grasp of irony and metaphor. AI aims to simulate human responses, but it cannot be moved by poetry. What use could it be for marking English Literature essays?

Three sets of essays that I assigned to my IGCSE group during their recent study of Othello were marked both by me and by the platform. I provided Progressay with anonymised copies of the group’s essays. I also sent the mark scheme, and a representative sample of my marks, without supporting justification.

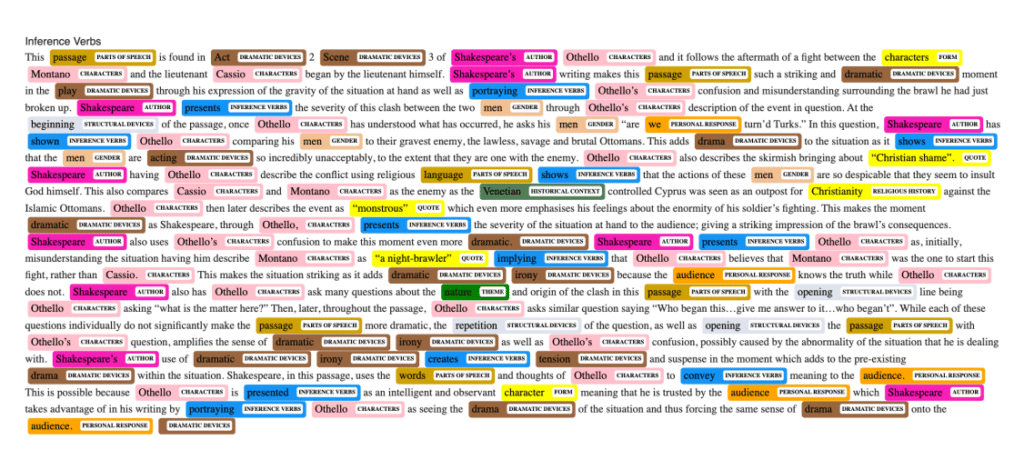

The boys were as interested as I was to see what Progressay came back with. We received whole-class data that analysed the components of the essays including quotation frequency and length, use of inference verbs, alternative interpretations, personal responses, thematic exploration, dramatic devices, figurative devices, structural devices, and historic context. Each was measured against the whole cohort data possessed by Progressay in the form of ‘What Went Well’ and ‘Even Better If’. Each boy received his essay similarly broken into its constituent parts (with paragraph breaks removed):

There were some errors in the analytics. For example, the verb ‘acts’ was labelled as a dramatic device; and a personal pronoun in a quotation was wrongly identified as a personal response. These can be overridden and the platform learns from these corrections. The boys also received detailed individual feedback, comparing the frequency of use of each component with the whole cohort. This breakdown – much more detailed and accurate than I could possibly provide by myself – was very useful.

The platform awarded marks by comparing those I had given to the sample essays with the components of those essays. A weakness of ‘teaching’ the platform this way is that it was learning to mark the essays using my own marking habits. While I am an experienced marker and (I like to think) a reliable one, any biases in my methods were replicated by the AI. The wider the data the platform acquires, the more such biases will disappear. It did a good job: the marks awarded by the platform across all the essays were on average within 1½ marks (out of 25) of my marks. It turns out that this is closer than between two experienced examiners.

The marks awarded by the platform across all the essays were on average within 1½ marks (out of 25) of my marks. It turns out that this is closer than between two experienced examiners.

I was surprised by this, because the platform was registering frequency of component parts rather than quality of insight or expression. The marking descriptors focus on both. For example, Assessment Objective 1 is to ‘show detailed knowledge of the content of literary texts, supported by reference to the text’. The platform can assess the level of detail and the number of references. Can it determine if those quotations are ‘well-selected’ (Band 7) or if they are incorporated ‘with skill and flair’ (Band 8)? Can it determine if the critical understanding on display shows ‘individuality and insight’ (Band 8)? Can it evaluate whether a ‘personal response’ is ‘perceptive’ or ‘convincing’? My scepticism about such questions remains, but I shall continue to trial the platform because it does provide very useful, granular information.

The fact that AI can perform certain cognitive functions much faster and more accurately than human beings suggests that we need to recognise what AI and human intelligence respectively are good at, and combine them elegantly.

[1] Luckin, R., Holmes, W., Griffiths, M. & Forcier, L. B., (2016). Intelligence Unleashed. An argument for AI in Education. London: Pearson